Comparison of Recurrent Neural Networks for Slovak Punctuation Restoration

Daniel Hládek, Ján Staš and Stanislav Ondáš

Technical University of Košice

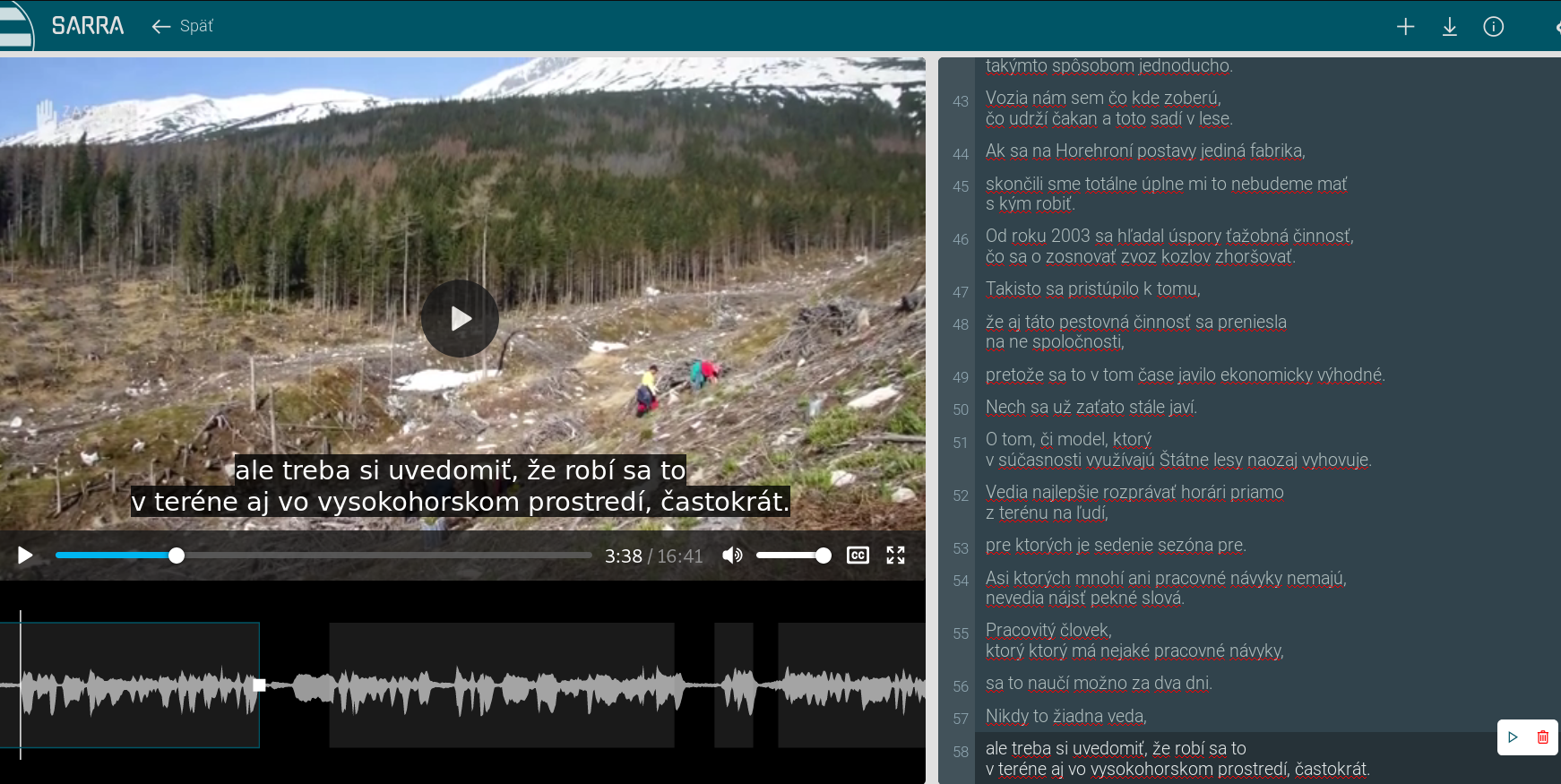

Background

- Subtitles for the hearing impaired

- Legal dictation system


Motivation

Post-processing of the output of the speech recognition system.

Figure: where to add punctuation in subtitles

Punctuation restoration

Adds missing punctuation marks:

- comma, period

- question mark, exclamation mark, colon, semicolon
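As a post-processing step, the restorer only has to decide, for each recognized word, which mark (if any) follows it. A minimal sketch of that final step, assuming the model has already produced one label per word; the label names and the `apply_punctuation` helper are hypothetical:

```python
from typing import List

# Hypothetical label set: "NO" means no punctuation after the word.
LABEL_TO_MARK = {"NO": "", "COMMA": ",", "PERIOD": ".", "QUESTION": "?"}


def apply_punctuation(words: List[str], labels: List[str]) -> str:
    """Attach the predicted punctuation mark (if any) to each word of an
    unpunctuated speech recognition transcript."""
    if len(words) != len(labels):
        raise ValueError("expected exactly one label per word")
    return " ".join(w + LABEL_TO_MARK[label] for w, label in zip(words, labels))


print(apply_punctuation(["ahoj", "ako", "sa", "máš"], ["COMMA", "NO", "NO", "QUESTION"]))
# prints: ahoj, ako sa máš?
```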

Context to class mapping

Figure: a context of words is mapped to one punctuation class (?, . or ,)

ahoj ako sa máš ("hi, how are you") -> ?

The training data

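stovky miliárd korún . dnes vo fonde , kde sa potrebné peniaze

(Slovak, roughly: "hundreds of billions of crowns . today in the fund , where the necessary money")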
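The (context, label) pairs listed next appear to follow a sliding-window rule: each window of tokens is labelled with the punctuation token that immediately follows it, or NO otherwise. A minimal sketch under that assumption; `make_examples` and `PUNCT_LABELS` are hypothetical names, and the window size of 3 only mirrors the example:

```python
from typing import List, Tuple

# Hypothetical mapping from punctuation tokens to class labels.
PUNCT_LABELS = {".": "PERIOD", ",": "COMMA"}


def make_examples(tokens: List[str], window: int = 3) -> List[Tuple[Tuple[str, ...], str]]:
    """Slide a fixed-size window over the token stream and label each window
    with the punctuation token that immediately follows it, or NO otherwise."""
    examples = []
    # Stop early enough that every window has a following token to inspect.
    for i in range(len(tokens) - window):
        context = tuple(tokens[i:i + window])
        following = tokens[i + window]
        examples.append((context, PUNCT_LABELS.get(following, "NO")))
    return examples


sentence = "stovky miliárd korún . dnes vo fonde , kde sa potrebné peniaze"
for context, label in make_examples(sentence.split()):
    print(" ".join(context), "->", label)
```

The sketch emits every window, including some (such as `. dnes vo`) that the slide leaves out.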

stovky miliárd korún -> PERIOD

miliárd korún . -> NO

korún . dnes -> NO

dnes vo fonde -> COMMA

vo fonde , -> NO

Context

| Words | ahoj | ako | sa | máš |
|---|---|---|---|---|
| Integers | 12 | 56 | 78 | 123 |
| Embedding vectors | 0.21 | 0.34 | 0.35 | 0.87 |
|  | 0.01 | 0.35 | 0.45 | 0.51 |
|  | 0.61 | 0.74 | 0.15 | 0.87 |

The recurrent neural network model

The model maps a matrix of embedding vectors to one of the possible punctuation marks.
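On the Context slide, words become integer indices and each index selects one embedding vector; stacking the vectors gives the matrix the model reads. A minimal sketch, assuming a hypothetical vocabulary whose indices copy the illustration and a randomly initialised lookup table (in practice the table is learned or pre-trained):

```python
import random
from typing import Dict, List

# Hypothetical vocabulary; the indices mirror the illustration on the slide.
VOCAB: Dict[str, int] = {"<unk>": 0, "ahoj": 12, "ako": 56, "sa": 78, "máš": 123}


def build_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Randomly initialised lookup table: one row of `dim` floats per word index."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]


def encode(words: List[str], vocab: Dict[str, int]) -> List[int]:
    """Map words to integer indices, falling back to <unk> for unseen words."""
    return [vocab.get(w, vocab["<unk>"]) for w in words]


def embed(indices: List[int], table: List[List[float]]) -> List[List[float]]:
    """Look up one embedding vector per index, giving a len(indices) x dim matrix."""
    return [table[i] for i in indices]


words = "ahoj ako sa máš".split()
ids = encode(words, VOCAB)                             # [12, 56, 78, 123]
table = build_embedding_table(vocab_size=124, dim=3)   # index 123 needs 124 rows
matrix = embed(ids, table)                             # 4 words x 3 dimensions
```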

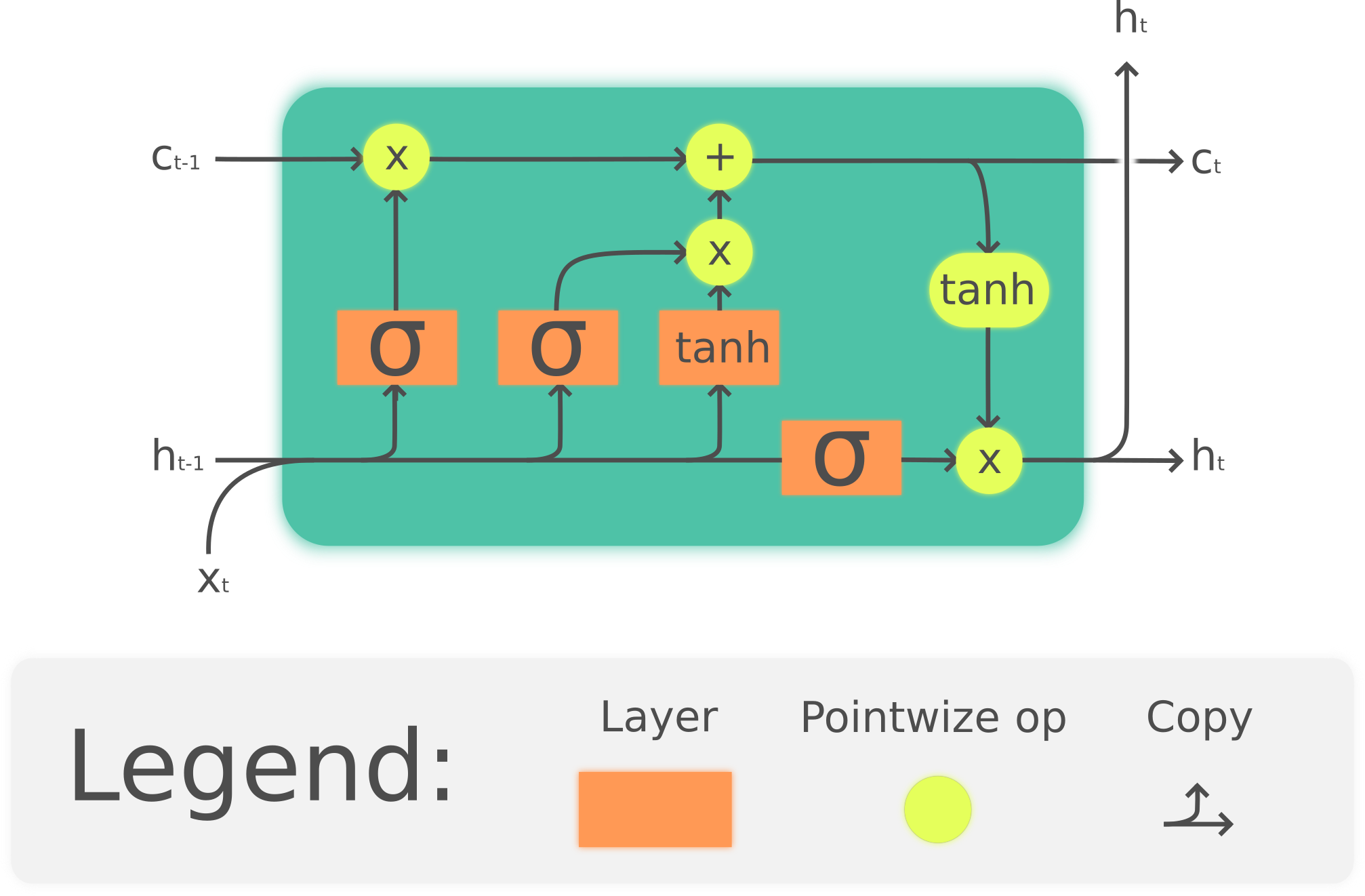

- long short-term memory (LSTM)

- gated recurrent unit (GRU)
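One practical difference between the two cells is size: an LSTM computes four gate/candidate vectors per step, a GRU only three. A small worked count for the classic formulations (input weights, recurrent weights and one bias per gate), assuming the 300-dimensional embeddings and 100 recurrent units given in the architecture below:

```python
def lstm_params(input_dim: int, units: int) -> int:
    """Classic LSTM: input, forget and output gates plus the cell candidate."""
    return 4 * (units * input_dim + units * units + units)


def gru_params(input_dim: int, units: int) -> int:
    """Classic GRU with one bias per gate: update, reset and candidate."""
    return 3 * (units * input_dim + units * units + units)


print("LSTM:", lstm_params(300, 100))  # 160400
print("GRU: ", gru_params(300, 100))   # 120300
```

Some libraries add a second bias vector to the GRU (Keras does with `reset_after=True`, its default in TensorFlow 2), which adds another 3 × 100 parameters and gives 120,600 here.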

RNN Architecture

- Input layer: 20 tokens

- Embedding: 300 dimensions

- Recurrent layer (LSTM or GRU): 100 units

- Output softmax layer: 3 classes
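The stack above can be illustrated with an untrained, pure-Python forward pass. This is a minimal sketch, not the authors' implementation: it assumes a GRU in one common formulation, small random weights, one prediction per context window, and an optional bi-directional mode that concatenates the final forward and backward states. The class and function names are hypothetical.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def rand_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    """Small random weights; a real model learns these by backpropagation."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def softmax(v: Vector) -> Vector:
    top = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(x - top) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


class GRU:
    """Untrained GRU layer: update gate z, reset gate r, candidate state n."""

    def __init__(self, input_dim: int, units: int, rng: random.Random):
        self.units = units
        # Per gate: input weights W, recurrent weights U and bias b.
        self.W: Dict[str, Matrix] = {g: rand_matrix(units, input_dim, rng) for g in "zrn"}
        self.U: Dict[str, Matrix] = {g: rand_matrix(units, units, rng) for g in "zrn"}
        self.b: Dict[str, Vector] = {g: [0.0] * units for g in "zrn"}

    def _affine(self, gate: str, x: Vector, h: Vector) -> Vector:
        return [a + c + d for a, c, d in
                zip(matvec(self.W[gate], x), matvec(self.U[gate], h), self.b[gate])]

    def step(self, x: Vector, h: Vector) -> Vector:
        z = [sigmoid(v) for v in self._affine("z", x, h)]
        r = [sigmoid(v) for v in self._affine("r", x, h)]
        # The candidate sees the previous state only through the reset gate.
        n = [math.tanh(v) for v in self._affine("n", x, [ri * hi for ri, hi in zip(r, h)])]
        return [(1.0 - zi) * hi + zi * ni for zi, hi, ni in zip(z, h, n)]

    def run(self, xs: List[Vector]) -> Vector:
        h = [0.0] * self.units
        for x in xs:
            h = self.step(x, h)
        return h  # final state summarises the whole context window


class PunctuationRNN:
    """Embedding -> GRU -> softmax; the defaults follow the layer sizes above."""

    def __init__(self, vocab_size: int, embed_dim: int = 300, units: int = 100,
                 classes: int = 3, bidirectional: bool = False, seed: int = 0):
        rng = random.Random(seed)
        self.embedding = rand_matrix(vocab_size, embed_dim, rng)
        self.forward_rnn = GRU(embed_dim, units, rng)
        self.backward_rnn = GRU(embed_dim, units, rng) if bidirectional else None
        out_dim = units * (2 if bidirectional else 1)
        self.W_out = rand_matrix(classes, out_dim, rng)
        self.b_out = [0.0] * classes

    def predict_proba(self, token_ids: List[int]) -> Vector:
        xs = [self.embedding[i] for i in token_ids]
        h = self.forward_rnn.run(xs)
        if self.backward_rnn is not None:
            # Bi-directional variant: also read the window right to left, then concatenate.
            h = h + self.backward_rnn.run(xs[::-1])
        logits = [a + b for a, b in zip(matvec(self.W_out, h), self.b_out)]
        return softmax(logits)


# Small sizes keep the pure-Python demo fast.
model = PunctuationRNN(vocab_size=50, embed_dim=8, units=6, bidirectional=True)
probs = model.predict_proba(list(range(20)))  # 20 token ids, as in the input layer
print([round(p, 3) for p in probs])
```

Swapping the GRU for an LSTM would change only the recurrent cell; the embedding lookup and the softmax output stay the same.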

LSTM*

By Guillaume Chevalier - Own work, CC BY 4.0, https://commons.wikimedia.org/w/index.php?curid=71836793

The training data size

| Dataset | Tokens | Sentences | Size (bytes) |
|---|---|---|---|
| train | 43,693,819 | 2,587,896 | 305,660,294 |
| test | 6,168 | 346 | 43,372 |
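A quick check of the corpus statistics, using only the figures from the table (the size column is assumed to be in bytes):

```python
# Figures copied from the training data size table.
splits = {
    "train": {"tokens": 43_693_819, "sentences": 2_587_896, "bytes": 305_660_294},
    "test": {"tokens": 6_168, "sentences": 346, "bytes": 43_372},
}

for name, s in splits.items():
    tokens_per_sentence = s["tokens"] / s["sentences"]
    bytes_per_token = s["bytes"] / s["tokens"]
    print(f"{name}: {tokens_per_sentence:.1f} tokens/sentence, {bytes_per_token:.2f} bytes/token")

ratio = splits["train"]["tokens"] / splits["test"]["tokens"]
print(f"train/test token ratio: {ratio:,.0f}")
```

Both splits come out at roughly 17 tokens per sentence and about 7 bytes per token.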

Uni-Directional Results

After 20 training epochs

| Network | Comma F1 | Period F1 |
|---|---|---|
| LSTM | 0.81 | 0.65 |
| GRU | 0.81 | 0.67 |
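The tables report F1, the harmonic mean of precision and recall, separately for commas and periods. A minimal sketch of that per-class computation, assuming one gold and one predicted label per position; the toy labels are made up purely for illustration:

```python
from typing import Dict, List, Sequence


def per_class_f1(gold: List[str], pred: List[str],
                 classes: Sequence[str] = ("COMMA", "PERIOD")) -> Dict[str, float]:
    """F1 = 2PR / (P + R) for each punctuation class; NO is not scored."""
    scores = {}
    for c in classes:
        tp = sum(1 for g, p in zip(gold, pred) if g == c and p == c)
        fp = sum(1 for g, p in zip(gold, pred) if g != c and p == c)
        fn = sum(1 for g, p in zip(gold, pred) if g == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        total = precision + recall
        scores[c] = 2 * precision * recall / total if total else 0.0
    return scores


gold = ["NO", "COMMA", "NO", "PERIOD", "NO", "COMMA", "PERIOD", "NO"]
pred = ["NO", "COMMA", "COMMA", "PERIOD", "NO", "NO", "NO", "NO"]
scores = per_class_f1(gold, pred)
print(scores)  # COMMA: P = R = 0.5 -> 0.5; PERIOD: P = 1.0, R = 0.5 -> about 0.667
```

Libraries such as scikit-learn provide the same metric (`f1_score` with `average=None`), which is safer than a hand-written version for real evaluations.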

Bi-Directional Results

After 10 training epochs

| Network | Comma F1 | Period F1 |
|---|---|---|
| LSTM | 0.82 | 0.68 |
| GRU | 0.88 | 0.86 |

Problems

- Overfitting (overtraining) after 20 epochs

- Slow network training (several days)

Future research

- Dropout

- Convolutional networks
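Dropout, one of the directions listed, counters overfitting by randomly zeroing activations during training. A minimal sketch of the common "inverted" variant, which rescales the surviving values so that nothing needs to change at prediction time; the `dropout` helper is hypothetical and is not part of the system described here:

```python
import random
from typing import List, Optional


def dropout(values: List[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Zero each value with probability `rate` and scale the kept ones by
    1 / (1 - rate); at prediction time the input passes through unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(values)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


hidden = [0.5, -0.2, 0.9, 0.1]
print(dropout(hidden, rate=0.5, rng=random.Random(0)))  # training: some zeros, rest doubled
print(dropout(hidden, rate=0.5, training=False))        # prediction: unchanged
```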


https://marhula.fei.tuke.sk/sarra/

https://nlp.kemt.fei.tuke.sk